| Reliability engineering |

Design for reliability

Design for reliability (DFR) is an emerging discipline that refers to the process of designing reliability into products. This process encompasses several tools and practices and describes the order in which an organization needs to deploy them to drive reliability into its products. Typically, the first step in the DFR process is to set the system's reliability requirements. Reliability must be "designed in" to the system. During system design, the top-level reliability requirements are then allocated to subsystems by design engineers and reliability engineers working together.
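As a minimal sketch of allocation, assume the subsystems operate in series and are treated as equally critical (an equal-apportionment assumption that the text above does not state). Under that assumption each of n subsystems must reach the n-th root of the system target. The function name and the 0.95 target are hypothetical.

```python
def allocate_equal(system_reliability: float, n_subsystems: int) -> float:
    """Equal apportionment: reliability each of n series subsystems must meet."""
    if not 0.0 < system_reliability <= 1.0:
        raise ValueError("system_reliability must be in (0, 1]")
    if n_subsystems < 1:
        raise ValueError("n_subsystems must be at least 1")
    # In a series system the subsystem reliabilities multiply,
    # so equal shares are the n-th root of the system target.
    return system_reliability ** (1.0 / n_subsystems)


# Hypothetical example: a 0.95 system target split across 4 series subsystems.
target = allocate_equal(0.95, 4)
print(f"Each subsystem needs a reliability of about {target:.4f}")
```

In practice the split is rarely equal; subsystems that are more complex or less mature usually receive a looser target, which is part of what the design and reliability engineers negotiate.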

Reliability design begins with the development of a model. Reliability models use block diagrams and fault trees to provide a graphical means of evaluating the relationships between different parts of the system. These models incorporate predictions based on parts-count failure rates taken from historical data. While the predictions are often not accurate in an absolute sense, they are valuable for assessing relative differences between design alternatives.
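The relative comparison described above can be sketched as follows, assuming constant failure rates (an exponential model) and a series arrangement in which any part failure fails the system. The part quantities and failure rates are made-up illustrative values, not data from any handbook.

```python
import math


def parts_count_failure_rate(parts):
    """Sum quantity * failure rate over all part types (failures per hour)."""
    return sum(quantity * rate for quantity, rate in parts)


def series_reliability(failure_rate, hours):
    """Reliability over a mission of `hours` at a constant total failure rate."""
    return math.exp(-failure_rate * hours)


# Hypothetical parts lists: (quantity, failure rate in failures per hour).
design_a = [(10, 2e-7), (4, 5e-7), (1, 1e-6)]
design_b = [(8, 2e-7), (4, 5e-7), (1, 1e-6)]

for name, parts in (("A", design_a), ("B", design_b)):
    lam = parts_count_failure_rate(parts)
    print(
        f"Design {name}: lambda={lam:.2e}/h, "
        f"MTBF={1.0 / lam:.0f} h, "
        f"R(1000 h)={series_reliability(lam, 1000.0):.5f}"
    )
```

Even if the absolute numbers are off, the ranking of the two designs is what the comparison is meant to show.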

One of the most important design techniques is redundancy. This means that if one part of the system fails, there is an alternate success path, such as a backup system. For example, an automobile brake light might use two light bulbs: if one bulb fails, the brake light still operates using the other bulb. Redundancy significantly increases system reliability, and is often the only viable means of doing so. However, redundancy is difficult and expensive, and is therefore limited to critical parts of the system. Another design technique, physics of failure, relies on understanding the physical processes of stress, strength and failure at a very detailed level. The material or component can then be redesigned to reduce the probability of failure. Another common design technique is component derating: selecting components whose tolerance significantly exceeds the expected stress, such as using a heavier-gauge wire that exceeds the normal specification for the expected electrical current.
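The brake-light example of redundancy can be sketched numerically, assuming the bulbs fail independently and the light works as long as at least one bulb works (active parallel redundancy). The bulb reliability of 0.9 is a hypothetical value.

```python
def parallel_reliability(unit_reliability: float, n_units: int) -> float:
    """Reliability of n independent, identical units in active parallel."""
    if not 0.0 <= unit_reliability <= 1.0:
        raise ValueError("unit_reliability must be in [0, 1]")
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    # The system fails only if every unit fails.
    return 1.0 - (1.0 - unit_reliability) ** n_units


# Hypothetical bulb reliability over some service interval.
bulb = 0.9
for n in (1, 2, 3):
    print(f"{n} bulb(s): {parallel_reliability(bulb, n):.4f}")
```

The independence assumption is the weak point of this calculation: a common cause, such as a blown fuse feeding both bulbs, defeats the redundancy and is not captured here.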

Many tasks, techniques and analyses are specific to particular industries and applications. Commonly these include:

- Built-in test (BIT)
- Failure mode and effects analysis (FMEA)
- Reliability simulation modeling
- Thermal analysis
- Reliability block diagram analysis
- Fault tree analysis
- Sneak circuit analysis
- Accelerated testing
- Reliability growth analysis
- Weibull analysis
- Electromagnetic analysis
- Statistical interference

Results are presented during the system design reviews and logistics reviews. Reliability is just one requirement among many system requirements. Engineering trade studies are used to determine the optimum balance between reliability and other requirements and constraints.

Reliability testing

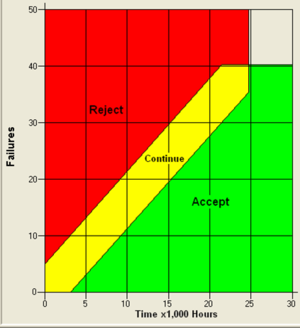

A Reliability Sequential Test Plan

The purpose of reliability testing is to discover potential problems with the design as early as possible and, ultimately, provide confidence that the system meets its reliability requirements.

Reliability testing may be performed at several levels. Complex systems may be tested at component, circuit board, unit, assembly, subsystem and system levels. (The test level nomenclature varies among applications.) For example, performing environmental stress screening tests at lower levels, such as piece parts or small assemblies, catches problems before they cause failures at higher levels. Testing proceeds during each level of integration through full-up system testing, developmental testing, and operational testing, thereby reducing program risk. System reliability is calculated at each test level. Reliability growth techniques and failure reporting, analysis and corrective action systems (FRACAS) are often employed to improve reliability as testing progresses. The drawbacks to such extensive testing are time and expense. Customers may choose to accept more risk by eliminating some or all lower levels of testing.

It is not always feasible to test all system requirements. Some systems are prohibitively expensive to test; some failure modes may take years to observe; some complex interactions result in a huge number of possible test cases; and some tests require the use of limited test ranges or other resources. In such cases, different approaches to testing can be used, such as accelerated life testing, design of experiments, and simulations.
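To illustrate how accelerated life testing can compress years of field exposure into a shorter test, the sketch below uses the Arrhenius model, a common choice for temperature-driven failure mechanisms. The text above names no particular model, so this choice is an assumption, as are the 0.7 eV activation energy and the two temperatures.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(activation_energy_ev, use_temp_c, stress_temp_c):
    """Acceleration factor of a stress temperature relative to a use temperature."""
    t_use = use_temp_c + 273.15        # convert Celsius to kelvin
    t_stress = stress_temp_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_use - 1.0 / t_stress
    )
    return math.exp(exponent)


# Hypothetical values: 0.7 eV mechanism, 40 C in the field, 85 C in the chamber.
af = arrhenius_acceleration(0.7, 40.0, 85.0)
print(f"Acceleration factor: {af:.1f}")
print(f"1000 test hours represent roughly {1000.0 * af:.0f} field hours")
```

The result is only as good as the activation energy, and it applies to one failure mechanism at a time; stress levels that trigger mechanisms absent in normal use invalidate the extrapolation.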

The desired level of statistical confidence also plays an important role in reliability testing. Statistical confidence is increased by increasing either the test time or the number of items tested. Reliability test plans are designed to achieve the specified reliability at the specified confidence level with the minimum number of test units and test time.
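One simple way to see the link between confidence and sample size is the zero-failure ("success-run") test, sketched here under the assumption of independent pass/fail trials (a binomial model). It is one of many possible test plans, not the plan the text has in mind, and the reliability and confidence values are hypothetical.

```python
import math


def success_run_sample_size(reliability: float, confidence: float) -> int:
    """Units that must all pass to demonstrate `reliability` at `confidence`."""
    if not (0.0 < reliability < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("reliability and confidence must be in (0, 1)")
    # With zero failures allowed, we need reliability**n <= 1 - confidence.
    return math.ceil(math.log(1.0 - confidence) / math.log(reliability))


# Hypothetical targets: raising either value increases the required sample.
for r, c in ((0.90, 0.90), (0.95, 0.90), (0.95, 0.95)):
    print(f"R={r:.2f}, C={c:.2f}: test {success_run_sample_size(r, c)} units")
```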

Different test plans result in different levels of risk to the producer and consumer. The desired reliability, statistical confidence, and risk levels for each side influence the ultimate test plan. Good test requirements ensure that the customer and developer agree in advance on how reliability requirements will be tested.
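The trade-off between producer's and consumer's risk can be sketched with a sequential probability ratio test of the kind that underlies sequential test plans. This is an illustration under stated assumptions, not the plan shown in the figure: times to failure are assumed exponential, the boundaries are Wald's approximations, and the MTBF values and risk levels are hypothetical.

```python
import math


def sprt_decision(failures, total_time, mtbf_good, mtbf_bad, alpha, beta):
    """Return 'accept', 'reject', or 'continue' for an exponential SPRT.

    alpha: producer's risk (rejecting equipment whose MTBF is mtbf_good)
    beta:  consumer's risk (accepting equipment whose MTBF is mtbf_bad)
    """
    if mtbf_bad >= mtbf_good:
        raise ValueError("mtbf_bad must be smaller than mtbf_good")
    # Log likelihood ratio of the "bad" hypothesis against the "good" one.
    llr = failures * math.log(mtbf_good / mtbf_bad) - total_time * (
        1.0 / mtbf_bad - 1.0 / mtbf_good
    )
    upper = math.log((1.0 - beta) / alpha)  # evidence for "bad": reject
    lower = math.log(beta / (1.0 - alpha))  # evidence for "good": accept
    if llr >= upper:
        return "reject"
    if llr <= lower:
        return "accept"
    return "continue"


# Hypothetical plan: 200 h acceptable MTBF, 100 h unacceptable, 10% risks.
for failures, hours in ((0, 500.0), (1, 100.0), (5, 100.0)):
    verdict = sprt_decision(failures, hours, 200.0, 100.0, 0.10, 0.10)
    print(f"{failures} failures in {hours:.0f} h: {verdict}")
```

Lowering either risk moves the corresponding boundary outward, so the test tends to run longer before a decision is reached.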

A key aspect of reliability testing is to define "failure". Although this may seem obvious, there are many situations where it is not clear whether a failure is really the fault of the system. Variations in test conditions, operator differences, weather, and unexpected situations create differences of opinion between the customer and the system developer. One strategy to address this issue is to use a scoring conference process. A scoring conference includes representatives from the customer, the developer, the test organization, the reliability organization, and sometimes independent observers. The scoring conference process is defined in the statement of work. Each test case is considered by the group and "scored" as a success or failure. This scoring is the official result used by the reliability engineer.

As part of the requirements phase, the reliability engineer develops a test strategy with the customer. The test strategy makes trade-offs between the needs of the reliability organization, which wants as much data as possible, and constraints such as cost, schedule, and available resources. Test plans and procedures are developed for each reliability test, and results are documented in official reports.

|